AI tools can generate code faster than ever, but speed often breeds hallucinations. The industry is now realizing that the secret to reliable AI isn’t a smarter model; it’s better documentation.

We are currently navigating the “trough of disillusionment” with AI coding assistants. The initial magic of watching a bot write a generic Python script has faded, replaced by the daily frustration of debugging code that looks perfect but doesn’t actually work.

A recent observation from the team behind the Cursor editor captures this tension perfectly:

“While the automatic generation can be excessive, Cursor is designed to use documentation for context.”

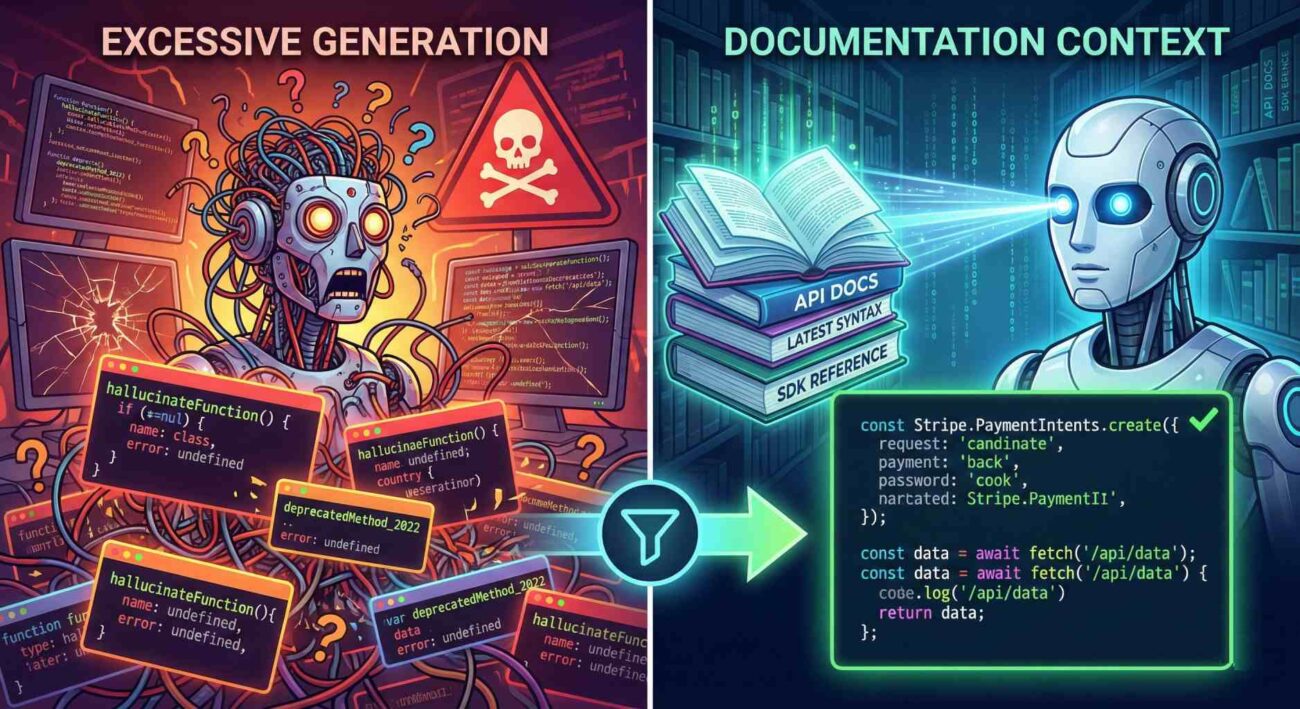

While this quote refers to a specific tool, it highlights a universal truth about the current state of generative AI in software development. We are trapped between “Excessive Generation” (the AI guessing too much) and the need for “Contextual Grounding” (the AI reading the manual).

Here is why this distinction defines the future of how we write code.

The Problem: The Hallucinating Junior Developer

When we say automatic generation is “excessive,” we are talking about the fundamental nature of Large Language Models (LLMs).

LLMs are enthusiastic pleasers. They are designed to predict the next likely token, not to verify truth. If you ask an AI to write code for a library that was updated last week, the AI will likely generate code using the syntax from two years ago (because that is what dominates its training data).

This “excessive” output takes three forms: invented functions that don’t exist but sound like they should, spaghetti code, and confidence over accuracy.

In professional environments, this excessiveness becomes a liability. You spend less time writing code, but more time auditing the AI’s “creative writing.”

The Solution: Retrieval-Augmented Generation (RAG)

The industry answer to this problem is a concept called RAG, or Retrieval-Augmented Generation. In plain English, this simply means: “Don’t let the AI guess; make it look up the answer first.”

This is the “Using Documentation for Context” part of the equation.

The most advanced coding workflows today no longer rely on the AI’s internal memory. Instead, modern tools let developers feed external documentation directly into the AI’s context window.
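To make “look it up first” concrete, here is a minimal sketch of the retrieval step in Python. It is an illustration under loud assumptions, not how Cursor or any other tool actually works: the `DOCS` snippets describe a made-up library, the helper names (`retrieve`, `build_prompt`) are hypothetical, and the scoring is plain word overlap, where a real system would more likely use embeddings or a search index.

```python
import re
from collections import Counter

# Hypothetical documentation snippets for a made-up library.
DOCS = [
    "connect(url, timeout=30) opens a session. The retries argument was removed in v2.",
    "Changelog: the logging format changed to JSON in v2.",
    "render(template, data) returns an HTML string.",
]

def tokenize(text):
    """Lowercase the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())

def score(query, doc):
    """Count how many query tokens also appear in the doc (toy relevance score)."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)

def retrieve(query, docs, k=2):
    """Return up to k docs with the highest overlap, dropping zero-score docs."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]

def build_prompt(question, docs, k=2):
    """Put the retrieved documentation in front of the question."""
    context = "\n\n".join(retrieve(question, docs, k))
    return (
        "Answer using only the documentation below.\n\n"
        f"Documentation:\n{context}\n\n"
        f"Question: {question}"
    )

if __name__ == "__main__":
    print(build_prompt("How do I call connect with a timeout?", DOCS))
```

The string returned by `build_prompt` is what you would send to the model. The point is the order of operations: the relevant documentation is found first, and the model is asked to answer from it rather than from memory.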

The Shift

This shift changes the role of the developer. We are moving away from “Prompt Engineering” (trying to trick the bot into being smart) toward Context Curation.

The skill of the future isn’t just knowing how to code; it’s knowing how to feed the machine the right information.

- Are you working with a private internal library? You need to feed the AI your internal READMEs.

- Are you using a bleeding-edge framework? You need to pipe the documentation URL into your IDE.
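For the internal-library case, context curation can be as simple as gathering your own docs into one block of text to paste or pipe into the assistant. The sketch below assumes a conventional repo layout (a top-level README plus a `docs/` folder of Markdown files) and a rough character budget standing in for the context window. The function name `collect_docs`, the glob patterns, and the budget are all hypothetical choices for illustration.

```python
from pathlib import Path

def collect_docs(root, patterns=("README*.md", "docs/**/*.md"), max_chars=8000):
    """Concatenate matching doc files under root, staying within a rough budget."""
    root = Path(root)
    seen = set()
    parts = []
    used = 0
    for pattern in patterns:
        for path in sorted(root.glob(pattern)):
            if path in seen or not path.is_file():
                continue
            seen.add(path)
            text = path.read_text(encoding="utf-8", errors="replace").strip()
            # Label each file so the model can tell the sources apart.
            block = f"### {path.relative_to(root).as_posix()}\n{text}\n"
            if used + len(block) > max_chars:
                continue  # skip files that would blow the budget
            parts.append(block)
            used += len(block)
    return "\n".join(parts)

if __name__ == "__main__":
    print(collect_docs("."))
```

Skipping oversized files instead of truncating them is a deliberate simplification here. A real workflow might split long documents into chunks and retrieve only the relevant ones.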

The quote holds true: automatic generation can be excessive. Left to their own devices, AI models are noisy and prone to drift.

The developers who will get the most out of AI in the coming years won’t be the ones using the smartest models. They will be the ones who understand that an AI is only as good as the documentation you give it.

If you want better code, stop asking the AI to remember. Start forcing it to read.