Current Large Language Models (LLMs) dazzle us with fluency, scale, and confidence. But fluency is not comprehension, and confidence is not perception. To understand the architectural ceiling of today’s AI, we must revisit an idea that is over two thousand years old.

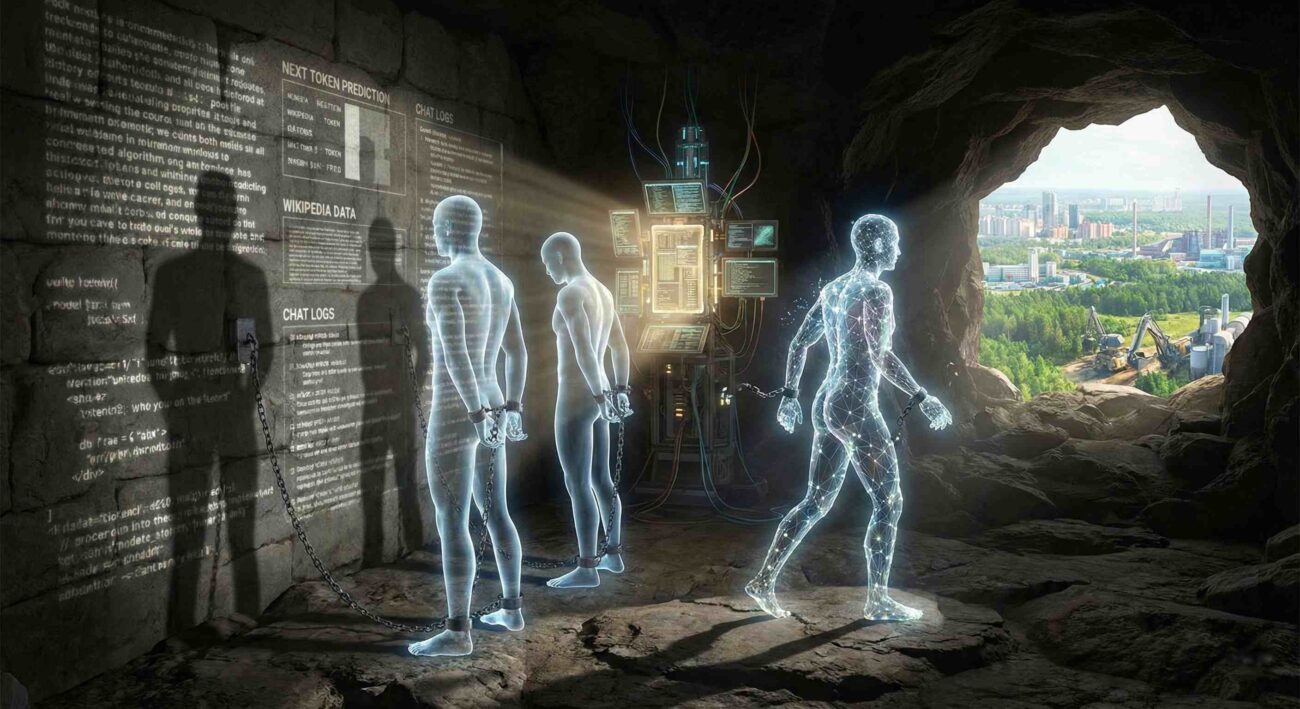

In The Republic, Plato describes the Allegory of the Cave: prisoners chained inside a cave can only see shadows projected on a wall. Having never seen the objects casting those shadows, they mistake the dark shapes for reality. They are deprived of the physical world, left only with a 2D projection of it.

Today, LLMs live in a very similar cave.

The Architectural Flaw: Learning from Shadows

LLMs do not perceive the world; they read about it. They do not see, hear, touch, or interact with physical constraints. They are trained almost exclusively on text: books, code, Reddit comments, and historical transcripts.

This text is their only input. Their only “experience.”

To an LLM, text is not a symbol of reality; it is the universe itself. But text is merely a shadow: a human-generated description of the world. It is mediated, incomplete, biased, and lossy. Language reflects our misconceptions, cultural blind spots, and outright falsehoods.

When we train LLMs on “the whole internet,” we aren’t giving them access to the world. We are giving them access to humanity’s shadows on the cave wall.

Why “Scale” Won’t Fix Hallucinations

The dominant strategy in AI development has been scaling. It rests on empirical Scaling Laws and on the assumption that more data, more parameters, and more compute will eventually fix reasoning errors.

However, more shadows do not equal a 3D object.

LLMs are autoregressive engines trained to predict the statistically most probable next token. They are excellent at producing plausible language, but they have no internal concept of causality, physics, or consequences.

- Fluency is what they have: the ability to produce grammatical, plausible text.

- Grounding is what they lack: a connection between that text and the world it describes.
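The gap between fluency and grounding can be made concrete with a toy example. The sketch below is not how a real LLM is built: it swaps the neural network for a simple bigram counter, and the corpus and function names are invented for illustration. It does keep the essential property, which is that the model only knows which token tends to follow which.

```python
from collections import Counter, defaultdict

# Toy corpus (illustrative assumption): this is the model's entire "world".
CORPUS = [
    "the stone is heavy",
    "the balloon is light",
    "the stone falls down",
    "the balloon floats up",
]

def train_bigram(sentences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn raw follower counts into a probability distribution."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}

def sentence_probability(counts, sentence):
    """Multiply next-token probabilities along the sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= next_token_probs(counts, prev).get(nxt, 0.0)
    return prob

counts = train_bigram(CORPUS)
true_p = sentence_probability(counts, "the stone is heavy")
false_p = sentence_probability(counts, "the stone is light")
print(true_p, false_p)
```

The model never saw "the stone is light", yet it scores that sentence exactly as high as the true one, because every word-to-word step is familiar. Nothing in the procedure checks a sentence against a stone.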

This is why “hallucinations” are not a bug that can be patched; they are a structural limitation. As AI pioneer Yann LeCun has repeatedly argued, language alone is a low-bandwidth representation of reality. It is insufficient for true intelligence.

The Pivot: Enter “World Models”

The industry’s attention is shifting from models that speak to models that simulate. These are World Models.

Unlike LLMs, World Models are not limited to text. They build internal representations of how environments function. They incorporate temporal data, sensor inputs, feedback loops, and physics.

Instead of asking the LLM's question, “What is the most likely next word?”, World Models ask a much more powerful one: “What will happen if I do this?”
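That question maps onto a simple loop: take a state, apply a candidate action to an internal model of the environment, and compare the predicted outcomes. The sketch below is a minimal illustration under heavy assumptions. The physics is hand-written rather than learned from sensor data, and `State`, `step`, `rollout`, and `choose_action` are hypothetical names, not part of any real library.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class State:
    position: float  # metres
    velocity: float  # metres per second

def step(state, force, dt=1.0, mass=1.0):
    """Hypothetical transition model: the state after applying `force` for `dt` seconds."""
    velocity = state.velocity + (force / mass) * dt
    position = state.position + velocity * dt
    return State(position, velocity)

def rollout(state, forces):
    """Simulate a sequence of actions without touching the real system."""
    for force in forces:
        state = step(state, force)
    return state

def choose_action(state, target, candidates=(-1.0, 0.0, 1.0), horizon=3):
    """Pick the force whose simulated outcome ends closest to the target position."""
    def predicted_error(force):
        return abs(rollout(state, [force] * horizon).position - target)
    return min(candidates, key=predicted_error)

start = State(position=0.0, velocity=0.0)
print(rollout(start, [1.0, 1.0, 1.0]))    # predicted state after three pushes
print(choose_action(start, target=5.0))   # the push that best approaches the target
```

The planner never consults text. It answers "what will happen if I do this?" by running the consequences forward, which is the capability a next-word predictor lacks.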

The Practical Shift: From Summary to Simulation

For tech leaders and CTOs, this is not an abstract philosophy debate. It is a tangible shift in utility. We are moving from AI that summarizes data to AI that models outcomes.

- Supply Chain & Logistics: An LLM can write a report on a port strike. A World Model can simulate how the resulting port closure propagates through the network over 30 days, including fuel price spikes and supplier failures, so companies can test alternative routes before committing capital.

- Risk & Insurance: An LLM can summarize a policy. A World Model can simulate extreme weather events against infrastructure data to estimate cascading losses, “understanding” the physical risk in a way text cannot capture.

- Industrial Operations (Digital Twins): This is where World Models are most mature. A digital twin of a factory doesn’t just describe the machinery; it simulates the interaction of materials, heat, and timing. It allows operators to predict failure and optimize performance virtually.
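To give a flavor of what "modeling outcomes" means in the supply chain case, here is a deliberately tiny sketch. Every number in it is invented for illustration, and a real model would cover many nodes, prices, and uncertainties; the `simulate` function and its parameters are assumptions, not an existing tool.

```python
def simulate(days, closed_days, inventory=300, daily_demand=100,
             port_capacity=100, backup_capacity=0):
    """Return the days on which the factory could not be fully supplied.

    All quantities are made-up illustrative units, not real logistics data.
    """
    shortfall_days = []
    for day in range(1, days + 1):
        # While the port is closed, only the backup route (if any) delivers.
        arrivals = backup_capacity if day in closed_days else port_capacity
        inventory += arrivals
        shipped = min(inventory, daily_demand)
        inventory -= shipped
        if shipped < daily_demand:
            shortfall_days.append(day)
    return shortfall_days

closure = set(range(5, 15))  # port closed on days 5 through 14
baseline = simulate(30, closure)
with_backup = simulate(30, closure, backup_capacity=70)
print("shortfall days without backup:", baseline)
print("shortfall days with backup:   ", with_backup)
```

With these invented numbers, the closure produces seven days of shortfall once the buffer stock runs out, and a backup route carrying 70 units a day removes them. The point is the workflow: the alternative route is tested in simulation before any capital is committed.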

The Post-LLM Architecture

This does not mean the death of the Large Language Model. It means a demotion to its proper place.

In the next architectural stack:

- The LLM becomes the Interface. It handles the translation between human intent and machine-executable instructions. It is the diplomat.

- The World Model becomes the Engine. It handles grounding, prediction, planning, and logic. It is the strategist.
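The division of labor in that stack can be sketched in a few lines. This is an illustration only: the regex in `parse_request` stands in for an LLM call, the one-line rule in `run_engine` stands in for a real world model, and all names and numbers are hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass
class Query:
    closure_days: int

def parse_request(text):
    """Stand-in for the LLM interface: turn free text into a structured query.

    A real system would call a language model here; a regex keeps the sketch runnable.
    """
    match = re.search(r"(\d+)\s*days?", text)
    if match is None:
        raise ValueError("could not find a closure length in the request")
    return Query(closure_days=int(match.group(1)))

def run_engine(query, buffer_days=3):
    """Stand-in for the world model: predict the number of days without supply.

    Hypothetical rule: stock on hand covers `buffer_days` of any closure.
    """
    return max(0, query.closure_days - buffer_days)

def render_answer(query, shortfall_days):
    """Stand-in for the LLM turning the engine's result back into prose."""
    return (f"A {query.closure_days}-day closure is predicted to cause "
            f"{shortfall_days} days without supply.")

def answer(text):
    query = parse_request(text)
    return render_answer(query, run_engine(query))

print(answer("What happens if the port closes for 10 days?"))
```

The design choice worth noticing is where the number comes from. The language layer handles the conversation on both ends, but the prediction itself is produced by the engine, so it can be inspected and tested independently of how the answer is phrased.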

In Plato’s allegory, the prisoners do not free themselves by studying the shadows more closely. They free themselves by turning around to face the source of the light and, eventually, the world outside.

AI is approaching that moment. The organizations that succeed in the next decade will stop confusing fluent speech with understanding. They won’t just build AI that talks convincingly about the world; they will build AI that actually knows how it works.